Kubernetes Cluster API

Cluster API is a Kubernetes sub-project focused on providing declarative APIs and tooling to simplify provisioning, upgrading, and operating multiple Kubernetes clusters.

Started by the Kubernetes Special Interest Group (SIG) Cluster Lifecycle, the Cluster API project uses Kubernetes-style APIs and patterns to automate cluster lifecycle management for platform operators. The supporting infrastructure, like virtual machines, networks, load balancers, and VPCs, as well as the Kubernetes cluster configuration are all defined in the same way that application developers deploy and manage their workloads. This enables consistent and repeatable cluster deployments across a wide variety of infrastructure environments.

⚠️ Breaking Changes ⚠️

In order to use the ClusterClass (alpha) experimental feature the Kubernetes Version for the management cluster must be >= 1.22.0.

Feature gate name: ClusterTopology

Variable name to enable/disable the feature gate: CLUSTER_TOPOLOGY

Additional documentation:

- Background information: ClusterClass and Managed Topologies CAEP

- For ClusterClass authors:

  - Writing a ClusterClass

  - Changing a ClusterClass

  - Publishing a ClusterClass for clusterctl usage: clusterctl Provider contract

- For Cluster operators:

  - Creating a Cluster: Quick Start guide. Please note that the experience for creating a Cluster using ClusterClass is very similar to the one for creating a standalone Cluster. Infrastructure providers supporting ClusterClass provide Cluster templates leveraging this feature (e.g. the Docker infrastructure provider has a development-topology template).

  - Operating a managed Cluster

  - Planning topology rollouts: clusterctl alpha topology plan

Writing a ClusterClass

A ClusterClass becomes more useful and valuable when it can be used to create many Clusters of a similar shape. The goal of this document is to explain how ClusterClasses can be written so that they are flexible enough to be used in as many Clusters as possible by supporting variants of the same base Cluster shape.

Table of Contents

- Basic ClusterClass

- ClusterClass with MachinePools

- ClusterClass with MachineHealthChecks

- ClusterClass with patches

- ClusterClass with custom naming strategies

- Advanced features of ClusterClass with patches

- JSON patches tips & tricks

Basic ClusterClass

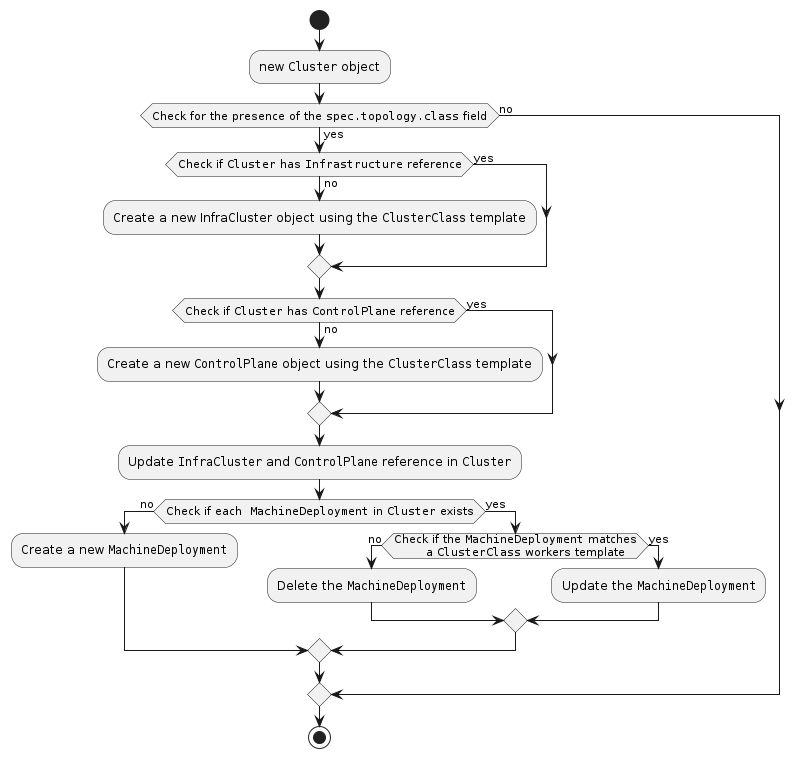

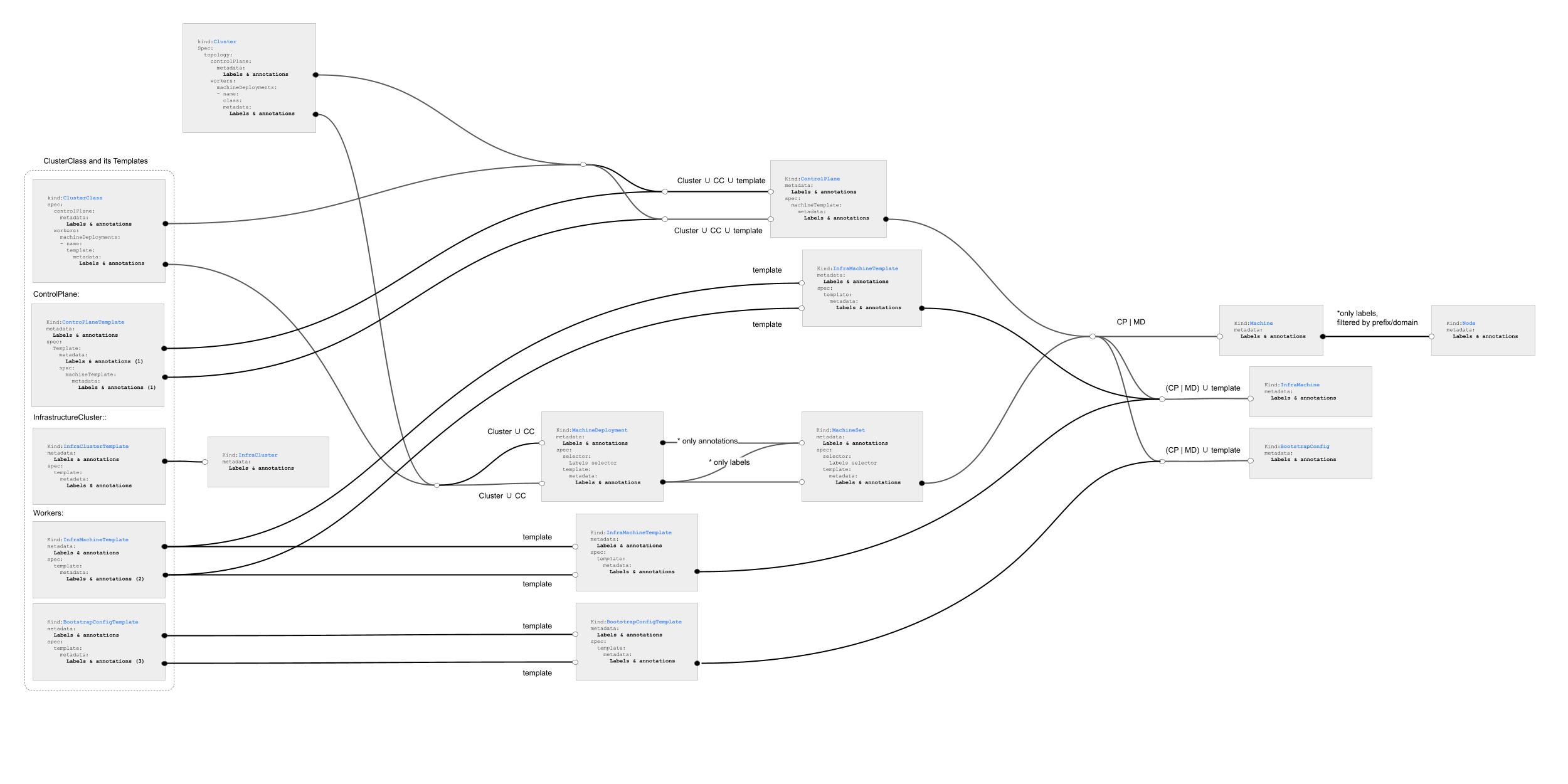

The following example shows a basic ClusterClass. It contains templates to shape the control plane, infrastructure and workers of a Cluster. When a Cluster is using this ClusterClass, the templates are used to generate the objects of the managed topology of the Cluster.

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      controlPlane:
        ref:
          apiVersion: controlplane.cluster.x-k8s.io/v1beta1
          kind: KubeadmControlPlaneTemplate
          name: docker-clusterclass-v0.1.0
          namespace: default
        machineInfrastructure:
          ref:
            kind: DockerMachineTemplate
            apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
            name: docker-clusterclass-v0.1.0
            namespace: default
      infrastructure:
        ref:
          apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
          kind: DockerClusterTemplate
          name: docker-clusterclass-v0.1.0-control-plane
          namespace: default
      workers:
        machineDeployments:
        - class: default-worker
          template:
            bootstrap:
              ref:
                apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
                kind: KubeadmConfigTemplate
                name: docker-clusterclass-v0.1.0-default-worker
                namespace: default
            infrastructure:
              ref:
                apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
                kind: DockerMachineTemplate
                name: docker-clusterclass-v0.1.0-default-worker
                namespace: default

The following example shows a Cluster using this ClusterClass. In this case a KubeadmControlPlane with the corresponding DockerMachineTemplate, a DockerCluster and a MachineDeployment with the corresponding KubeadmConfigTemplate and DockerMachineTemplate will be created. This basic ClusterClass is already very flexible. Via the topology on the Cluster the following can be configured:

- .spec.topology.version: the Kubernetes version of the Cluster

- .spec.topology.controlPlane: ControlPlane replicas and their metadata

- .spec.topology.workers: MachineDeployments and their replicas, metadata and failure domain

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: Cluster
    metadata:
      name: my-docker-cluster
    spec:
      topology:
        class: docker-clusterclass-v0.1.0
        version: v1.22.4
        controlPlane:
          replicas: 3
          metadata:
            labels:
              cpLabel: cpLabelValue
            annotations:
              cpAnnotation: cpAnnotationValue
        workers:
          machineDeployments:
          - class: default-worker
            name: md-0
            replicas: 4
            metadata:
              labels:
                mdLabel: mdLabelValue
              annotations:
                mdAnnotation: mdAnnotationValue
            failureDomain: region

Best practices:

- The ClusterClass name should be generic enough to make sense across multiple clusters, i.e. a name which corresponds to a single Cluster, e.g. “my-cluster”, is not recommended.

- Try to keep the ClusterClass names short and consistent (if you publish multiple ClusterClasses).

- As a ClusterClass usually evolves over time and you might want to rebase Clusters from one version of a ClusterClass to another, consider including a version suffix in the ClusterClass name. For more information about changing a ClusterClass please see: Changing a ClusterClass.

- Prefix the templates used in a ClusterClass with the name of the ClusterClass.

- Don’t reuse the same template in multiple ClusterClasses. This is automatically taken care of by prefixing the templates with the name of the ClusterClass.

For a full example ClusterClass for CAPD you can take a look at clusterclass-quickstart.yaml (which is also used in the CAPD quickstart with ClusterClass).

Tip: clusterctl alpha topology plan

The clusterctl alpha topology plan command can be used to test ClusterClasses; the output will show you what the resulting Cluster will look like, but without actually creating it.

For more details please see: clusterctl alpha topology plan.

ClusterClass with MachinePools

ClusterClass also supports MachinePool workers. They work very similarly to MachineDeployments. MachinePools can be specified in the ClusterClass template under the workers section like so:

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      workers:
        machinePools:
        - class: default-worker
          template:
            bootstrap:
              ref:
                apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
                kind: KubeadmConfigTemplate
                name: quick-start-default-worker-bootstraptemplate
            infrastructure:
              ref:
                apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
                kind: DockerMachinePoolTemplate
                name: quick-start-default-worker-machinepooltemplate

They can then be similarly defined as workers in the cluster template like so:

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: Cluster
    metadata:
      name: my-docker-cluster
    spec:
      topology:
        workers:
          machinePools:
          - class: default-worker
            name: mp-0
            replicas: 4
            metadata:
              labels:
                mpLabel: mpLabelValue
              annotations:
                mpAnnotation: mpAnnotationValue
            failureDomain: region

ClusterClass with MachineHealthChecks

MachineHealthChecks can be configured in the ClusterClass for the control plane and for a MachineDeployment class. The following configuration makes sure a MachineHealthCheck is created for the control plane and for every MachineDeployment using the default-worker class.

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      controlPlane:
        ...
        machineHealthCheck:
          maxUnhealthy: 33%
          nodeStartupTimeout: 15m
          unhealthyConditions:
          - type: Ready
            status: Unknown
            timeout: 300s
          - type: Ready
            status: "False"
            timeout: 300s
      workers:
        machineDeployments:
        - class: default-worker
          ...
          machineHealthCheck:
            unhealthyRange: "[0-2]"
            nodeStartupTimeout: 10m
            unhealthyConditions:
            - type: Ready
              status: Unknown
              timeout: 300s
            - type: Ready
              status: "False"
              timeout: 300s

ClusterClass with patches

As shown above, basic ClusterClasses are already very powerful. But there are cases where more powerful mechanisms are required. Let’s assume you want to manage multiple Clusters with the same ClusterClass, but they require different values for a field in one of the referenced templates of a ClusterClass.

A concrete example would be to deploy Clusters with different registries. In this case, every cluster needs a Cluster-specific value for .spec.kubeadmConfigSpec.clusterConfiguration.imageRepository in KubeadmControlPlane. Use cases like this can be implemented with ClusterClass patches.

Defining variables in the ClusterClass

The following example shows how variables can be defined in the ClusterClass. A variable definition specifies the name and the schema of a variable and if it is required. The schema defines how a variable is defaulted and validated. It supports a subset of the schema of CRDs. For more information please see the godoc.

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      ...
      variables:
      - name: imageRepository
        required: true
        schema:
          openAPIV3Schema:
            type: string
            description: ImageRepository is the container registry to pull images from.
            default: registry.k8s.io
            example: registry.k8s.io

Supported types

The following basic types are supported: string, integer, number and boolean. Complex types are also supported; please see the complex variable types section.

Defining patches in the ClusterClass

The variable can then be used in a patch to set a field on a template referenced in the ClusterClass. The selector specifies on which template the patch should be applied. jsonPatches specifies which JSON patches should be applied to that template. In this case we set the imageRepository field of the KubeadmControlPlaneTemplate to the value of the variable imageRepository. For more information please see the godoc.

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      ...
      patches:
      - name: imageRepository
        definitions:
        - selector:
            apiVersion: controlplane.cluster.x-k8s.io/v1beta1
            kind: KubeadmControlPlaneTemplate
            matchResources:
              controlPlane: true
          jsonPatches:
          - op: add
            path: /spec/template/spec/kubeadmConfigSpec/clusterConfiguration/imageRepository
            valueFrom:
              variable: imageRepository

Writing JSON patches

- Only fields below /spec can be patched.

- Only add, remove and replace operations are supported.

- It’s only possible to append and prepend to arrays. Insertions at a specific index are not supported.

- Be careful, appending or prepending an array variable to an array leads to a nested array (for more details please see this issue).

Setting variable values in the Cluster

After creating a ClusterClass with a variable definition, the user can now provide a value for the variable in the Cluster as in the example below.

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: Cluster
    metadata:
      name: my-docker-cluster
    spec:
      topology:
        ...
        variables:
        - name: imageRepository
          value: my.custom.registry

Variable defaulting

If the user does not set the value, but the corresponding variable definition in ClusterClass has a default value, the value is automatically added to the variables list.
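As an illustration of the defaulting described above, the following sketch assumes the imageRepository variable definition shown earlier (with default registry.k8s.io) and a Cluster whose user set no value for it. After defaulting, the Cluster would be expected to look roughly like this:

```yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: my-docker-cluster
spec:
  topology:
    ...
    variables:
    # Not set by the user; added by defaulting from the ClusterClass
    # variable definition (default: registry.k8s.io).
    - name: imageRepository
      value: registry.k8s.io
```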

ClusterClass with custom naming strategies

The controller needs to generate names for new objects when a Cluster is getting created from a ClusterClass. These names have to be unique for each namespace. The naming strategy enables this by concatenating the cluster name with a random suffix.

It is possible to provide a custom template for the name generation of ControlPlane, MachineDeployment and MachinePool objects.

The generated names must comply with the RFC 1123 standard.

Defining a custom naming strategy for ControlPlane objects

The naming strategy for ControlPlane supports the following properties:

template: Custom template which is used when generating the name of the ControlPlane object.

The following variables can be referenced in templates:

- .cluster.name: The name of the cluster object.

- .random: A random alphanumeric string, without vowels, of length 5.

Example which would match the default behavior:

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      controlPlane:
        ...
        namingStrategy:
          template: "{{ .cluster.name }}-{{ .random }}"
      ...

Defining a custom naming strategy for MachineDeployment objects

The naming strategy for MachineDeployments supports the following properties:

template: Custom template which is used when generating the name of the MachineDeployment object.

The following variables can be referenced in templates:

- .cluster.name: The name of the cluster object.

- .random: A random alphanumeric string, without vowels, of length 5.

- .machineDeployment.topologyName: The name of the MachineDeployment topology (Cluster.spec.topology.workers.machineDeployments[].name).

Example which would match the default behavior:

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      controlPlane:
        ...
      workers:
        machineDeployments:
        - class: default-worker
          ...
          namingStrategy:
            template: "{{ .cluster.name }}-{{ .machineDeployment.topologyName }}-{{ .random }}"

Defining a custom naming strategy for MachinePool objects

The naming strategy for MachinePools supports the following properties:

template: Custom template which is used when generating the name of the MachinePool object.

The following variables can be referenced in templates:

- .cluster.name: The name of the cluster object.

- .random: A random alphanumeric string, without vowels, of length 5.

- .machinePool.topologyName: The name of the MachinePool topology (Cluster.spec.topology.workers.machinePools[].name).

Example which would match the default behavior:

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      controlPlane:
        ...
      workers:
        machinePools:
        - class: default-worker
          ...
          namingStrategy:
            template: "{{ .cluster.name }}-{{ .machinePool.topologyName }}-{{ .random }}"

Advanced features of ClusterClass with patches

This section will explain more advanced features of ClusterClass patches.

MachineDeployment & MachinePool variable overrides

If you want to use many variations of MachineDeployments in Clusters, you can either define a MachineDeployment class for every variation or you can define patches and variables to make a single MachineDeployment class more flexible. The same applies for MachinePools.

In the following example we make the instanceType of an AWSMachineTemplate customizable.

First we define the workerMachineType variable and the corresponding patch:

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: aws-clusterclass-v0.1.0
    spec:
      ...
      variables:
      - name: workerMachineType
        required: true
        schema:
          openAPIV3Schema:
            type: string
            default: t3.large
      patches:
      - name: workerMachineType
        definitions:
        - selector:
            apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
            kind: AWSMachineTemplate
            matchResources:
              machineDeploymentClass:
                names:
                - default-worker
          jsonPatches:
          - op: add
            path: /spec/template/spec/instanceType
            valueFrom:
              variable: workerMachineType
    ---
    apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
    kind: AWSMachineTemplate
    metadata:
      name: aws-clusterclass-v0.1.0-default-worker
    spec:
      template:
        spec:
          # instanceType: workerMachineType will be set by the patch.
          iamInstanceProfile: "nodes.cluster-api-provider-aws.sigs.k8s.io"
    ---
    ...

In the Cluster resource the workerMachineType variable can then be set cluster-wide and it can also be overridden for an individual MachineDeployment or MachinePool.

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: Cluster
    metadata:
      name: my-aws-cluster
    spec:
      ...
      topology:
        class: aws-clusterclass-v0.1.0
        version: v1.22.0
        controlPlane:
          replicas: 3
        workers:
          machineDeployments:
          - class: "default-worker"
            name: "md-small-workers"
            replicas: 3
            variables:
              overrides:
              # Overrides the cluster-wide value with t3.small.
              - name: workerMachineType
                value: t3.small
          # Uses the cluster-wide value t3.large.
          - class: "default-worker"
            name: "md-large-workers"
            replicas: 3
        variables:
        - name: workerMachineType
          value: t3.large

Builtin variables

In addition to variables specified in the ClusterClass, the following builtin variables can be referenced in patches:

- builtin.cluster.{name,namespace,uid}

- builtin.cluster.topology.{version,class}

- builtin.cluster.network.{serviceDomain,services,pods,ipFamily}

  - Note: ipFamily is deprecated and will be removed in a future release. See https://github.com/kubernetes-sigs/cluster-api/issues/7521.

- builtin.controlPlane.{replicas,version,name,metadata.labels,metadata.annotations}

  - Please note, these variables are only available when patching control plane or control plane machine templates.

- builtin.controlPlane.machineTemplate.infrastructureRef.name

  - Please note, these variables are only available when using a control plane with machines and when patching control plane or control plane machine templates.

- builtin.machineDeployment.{replicas,version,class,name,topologyName,metadata.labels,metadata.annotations}

  - Please note, these variables are only available when patching the templates of a MachineDeployment and contain the values of the current MachineDeployment topology.

- builtin.machineDeployment.{infrastructureRef.name,bootstrap.configRef.name}

  - Please note, these variables are only available when patching the templates of a MachineDeployment and contain the values of the current MachineDeployment topology.

- builtin.machinePool.{replicas,version,class,name,topologyName,metadata.labels,metadata.annotations}

  - Please note, these variables are only available when patching the templates of a MachinePool and contain the values of the current MachinePool topology.

- builtin.machinePool.{infrastructureRef.name,bootstrap.configRef.name}

  - Please note, these variables are only available when patching the templates of a MachinePool and contain the values of the current MachinePool topology.

Builtin variables can be referenced just like regular variables, e.g.:

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      ...
      patches:
      - name: clusterName
        definitions:
        - selector:
          ...
          jsonPatches:
          - op: add
            path: /spec/template/spec/kubeadmConfigSpec/clusterConfiguration/controllerManager/extraArgs/cluster-name
            valueFrom:
              variable: builtin.cluster.name

Tips & Tricks

Builtin variables can be used to dynamically calculate image names. The version used in the patch will always be the same as the one we set in the corresponding MachineDeployment or MachinePool (works the same way with .builtin.controlPlane.version).

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      ...
      patches:
      - name: customImage
        description: "Sets the container image that is used for running dockerMachines."
        definitions:
        - selector:
            apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
            kind: DockerMachineTemplate
            matchResources:
              machineDeploymentClass:
                names:
                - default-worker
          jsonPatches:
          - op: add
            path: /spec/template/spec/customImage
            valueFrom:
              template: |
                kindest/node:{{ .builtin.machineDeployment.version }}

Complex variable types

Variables can also be objects, maps and arrays. An object is specified with the type object and by the schemas of the fields of the object. A map is specified with the type object and the schema of the map values. An array is specified via the type array and the schema of the array items.

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      ...
      variables:
      - name: httpProxy
        schema:
          openAPIV3Schema:
            type: object
            properties:
              # Schema of the url field.
              url:
                type: string
              # Schema of the noProxy field.
              noProxy:
                type: string
      - name: mdConfig
        schema:
          openAPIV3Schema:
            type: object
            additionalProperties:
              # Schema of the map values.
              type: object
              properties:
                osImage:
                  type: string
      - name: dnsServers
        schema:
          openAPIV3Schema:
            type: array
            items:
              # Schema of the array items.
              type: string

Objects, maps and arrays can be used in patches either directly by referencing the variable name, or by accessing individual fields. For example:

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      ...
      jsonPatches:
      - op: add
        path: /spec/template/spec/httpProxy/url
        valueFrom:
          # Use the url field of the httpProxy variable.
          variable: httpProxy.url
      - op: add
        path: /spec/template/spec/customImage
        valueFrom:
          # Use the osImage field of the mdConfig variable for the current MD class.
          template: "{{ (index .mdConfig .builtin.machineDeployment.class).osImage }}"
      - op: add
        path: /spec/template/spec/dnsServers
        valueFrom:
          # Use the entire dnsServers array.
          variable: dnsServers
      - op: add
        path: /spec/template/spec/dnsServer
        valueFrom:
          # Use the first item of the dnsServers array.
          variable: dnsServers[0]

Tips & Tricks

Complex variables can be used to make references in templates configurable, e.g. the identityRef used in AzureCluster. Of course it’s also possible to only make the name of the reference configurable, including restricting the valid values to a pre-defined enum.

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: azure-clusterclass-v0.1.0
    spec:
      ...
      variables:
      - name: clusterIdentityRef
        schema:
          openAPIV3Schema:
            type: object
            properties:
              kind:
                type: string
              name:
                type: string
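The alternative mentioned above, making only the name of the reference configurable and restricting it to an enum, could be sketched as follows. The variable name clusterIdentityRefName and the two allowed values are hypothetical and chosen only for illustration:

```yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: ClusterClass
metadata:
  name: azure-clusterclass-v0.1.0
spec:
  ...
  variables:
  # Hypothetical variable: only the name of the identity is configurable.
  - name: clusterIdentityRefName
    schema:
      openAPIV3Schema:
        type: string
        # Hypothetical allowed values; any other value is rejected by validation.
        enum:
        - identity-team-a
        - identity-team-b
```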

The OpenAPI schema also allows defining free-form objects, e.g.

    variables:
    - name: freeFormObject
      schema:
        openAPIV3Schema:
          type: object

Users should be aware that the lack of validation of user-provided data can lead to problems when those values are used in a patch or when the generated templates are created (see e.g. 6135).

As a consequence we recommend avoiding this practice while we are considering alternatives to make it explicit for ClusterClass authors to opt in to this feature, thus accepting the implied risks.

Using variable values in JSON patches

We already saw above that it’s possible to use variable values in JSON patches. It’s also possible to calculate values via Go templating or to use hard-coded values.

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      ...
      patches:
      - name: etcdImageTag
        definitions:
        - selector:
          ...
          jsonPatches:
          - op: add
            path: /spec/template/spec/kubeadmConfigSpec/clusterConfiguration/etcd
            valueFrom:
              # This template is first rendered with Go templating, then parsed by
              # a YAML/JSON parser and then used as value of the JSON patch.
              # For example, if the variable etcdImageTag is set to `3.5.1-0` the
              # .../clusterConfiguration/etcd field will be set to:
              # {"local": {"imageTag": "3.5.1-0"}}
              template: |
                local:
                  imageTag: {{ .etcdImageTag }}
      - name: imageRepository
        definitions:
        - selector:
          ...
          jsonPatches:
          - op: add
            path: /spec/template/spec/kubeadmConfigSpec/clusterConfiguration/imageRepository
            # This hard-coded value is used directly as value of the JSON patch.
            value: "my.custom.registry"

Variable paths

- Paths can be used in .valueFrom.template and .valueFrom.variable to access nested fields of arrays and objects.

- . is used to access a field of an object, e.g. httpProxy.url.

- [i] is used to access an array element, e.g. dnsServers[0].

- Because of the way Go templates work, the paths in templates have to start with a dot.

Tips & Tricks

Templates can be used to implement defaulting behavior during JSON patch value calculation. This can be used if the simple constant default value which can be specified in the schema is not enough.

    valueFrom:
      # If .vnetName is set, it is used. Otherwise, we will use `{{.builtin.cluster.name}}-vnet`.
      template: "{{ if .vnetName }}{{.vnetName}}{{else}}{{.builtin.cluster.name}}-vnet{{end}}"

When writing templates, a subset of functions from the Sprig library can be used to write expressions, e.g., {{ .name | upper }}. Only functions that are guaranteed to evaluate to the same result for a given input are allowed (e.g. upper or max can be used, while now or randAlpha cannot be used).

Optional patches

Patches can also be conditionally enabled. This can be done by configuring a Go template via enabledIf. The patch is then only applied if the Go template evaluates to true. In the following example the httpProxy patch is only applied if the httpProxy variable is set (and not empty).

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: docker-clusterclass-v0.1.0
    spec:
      ...
      variables:
      - name: httpProxy
        schema:
          openAPIV3Schema:
            type: string
      patches:
      - name: httpProxy
        enabledIf: "{{ if .httpProxy }}true{{end}}"
        definitions:
        ...

Tips & Tricks:

Hard-coded values can be used to test the impact of a patch during development, gradually roll out patches, etc.

enabledIf: false

A boolean variable can be used to enable/disable a patch (or “feature”). This can have opt-in or opt-out behavior depending on the default value of the variable.

enabledIf: "{{ .httpProxyEnabled }}"

Of course the same is possible by adding a boolean variable to a configuration object.

enabledIf: "{{ .httpProxy.enabled }}"

Builtin variables can be leveraged to apply a patch only for a specific Kubernetes version.

enabledIf: '{{ semverCompare "1.21.1" .builtin.controlPlane.version }}'

With semverCompare and coalesce a feature can be enabled in newer versions of Kubernetes for both KubeadmConfigTemplate and KubeadmControlPlane.

enabledIf: '{{ semverCompare "^1.22.0" (coalesce .builtin.controlPlane.version .builtin.machineDeployment.version )}}'

Builtin Variables

Please be aware that enabledIf is evaluated individually for each template that should be patched. While you can use builtin variables, using for example a MachineDeployment-specific variable can mean that the patch is only applied to some MachineDeployments.

Version-aware patches

In some cases the ClusterClass authors want a patch to be computed according to the Kubernetes version in use.

While this is not a problem “per se” and it does not differ from writing any other patch, it is important to keep in mind that there could be different Kubernetes versions in a Cluster at any time, all of them accessible via builtin variables:

- builtin.cluster.topology.version defines the Kubernetes version from cluster.topology, and it acts as the desired Kubernetes version for the entire cluster. However, during an upgrade workflow it could happen that some objects in the Cluster are still at the older version.

- builtin.controlPlane.version represents the desired version for the control plane object; usually this version changes immediately after cluster.topology.version is updated (unless there are other operations in progress preventing the upgrade from starting).

- builtin.machineDeployment.version represents the desired version for each specific MachineDeployment object; this version changes only after the upgrade for the control plane is completed, and in case of many MachineDeployments in the same cluster, they are upgraded sequentially.

- builtin.machinePool.version represents the desired version for each specific MachinePool object; this version changes only after the upgrade for the control plane is completed, and in case of many MachinePools in the same cluster, they are upgraded sequentially.

This information provides the basis for developing version-aware patches, allowing the patch author to determine when a patch should adapt to the new Kubernetes version by choosing one of the above variables. In practice the following rules apply to the most common use cases:

- When developing a version-aware patch for the control plane, builtin.controlPlane.version must be used.

- When developing a version-aware patch for MachineDeployments, builtin.machineDeployment.version must be used.

- When developing a version-aware patch for MachinePools, builtin.machinePool.version must be used.
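The rules above can be sketched with two patches that each use the builtin version variable matching the object they target. The patch names are hypothetical, the `^1.22.0` constraint is only an example, and the actual JSON patches are elided:

```yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: ClusterClass
metadata:
  name: docker-clusterclass-v0.1.0
spec:
  ...
  patches:
  # Hypothetical control plane patch: follows the control plane version.
  - name: controlPlaneVersionAware
    enabledIf: '{{ semverCompare "^1.22.0" .builtin.controlPlane.version }}'
    definitions:
    - selector:
        apiVersion: controlplane.cluster.x-k8s.io/v1beta1
        kind: KubeadmControlPlaneTemplate
        matchResources:
          controlPlane: true
      jsonPatches:
      ...
  # Hypothetical worker patch: follows the version of each MachineDeployment,
  # which changes only after the control plane upgrade is completed.
  - name: workerVersionAware
    enabledIf: '{{ semverCompare "^1.22.0" .builtin.machineDeployment.version }}'
    definitions:
    - selector:
        apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
        kind: KubeadmConfigTemplate
        matchResources:
          machineDeploymentClass:
            names:
            - default-worker
      jsonPatches:
      ...
```

Splitting the logic into one patch per object type keeps each enabledIf tied to a single version variable, so a worker template is not patched for the new version while its MachineDeployment is still at the old one.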

Tips & Tricks:

Sometimes users need to define variables to be used by version-aware patches, and in this case it is important to keep in mind that there could be different Kubernetes versions in a Cluster at any time.

A simple approach to solve this problem is to define a map of version-aware variables, with the key of each item being the Kubernetes version. Patches can then use the proper builtin variable as a lookup key to fetch the corresponding value for the Kubernetes version in use by each object.
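A minimal sketch of this map-based approach is shown below. The variable name nodeImages, the registry and the image tags are hypothetical, and the sketch assumes the map contains an entry for every Kubernetes version in use in the Cluster:

```yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: ClusterClass
metadata:
  name: docker-clusterclass-v0.1.0
spec:
  ...
  variables:
  # Hypothetical variable: map from Kubernetes version to the image to use.
  - name: nodeImages
    schema:
      openAPIV3Schema:
        type: object
        additionalProperties:
          type: string
  patches:
  - name: nodeImage
    definitions:
    - selector:
        apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
        kind: DockerMachineTemplate
        matchResources:
          machineDeploymentClass:
            names:
            - default-worker
      jsonPatches:
      - op: add
        path: /spec/template/spec/customImage
        valueFrom:
          # Look up the entry for the version of the current MachineDeployment.
          template: "{{ index .nodeImages .builtin.machineDeployment.version }}"
---
apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: my-docker-cluster
spec:
  topology:
    ...
    variables:
    - name: nodeImages
      value:
        # Hypothetical entries, one per Kubernetes version in use.
        v1.22.4: my.custom.registry/node:v1.22.4
        v1.23.0: my.custom.registry/node:v1.23.0
```

During an upgrade, MachineDeployments still at the older version keep resolving the older entry, while already upgraded ones resolve the newer entry.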

JSON patches tips & tricks

The JSON Patch specification (RFC 6902) requires that the object or array containing the target of an add operation already exists.

As a consequence ClusterClass authors should pay special attention when the following conditions apply in order to prevent errors when a patch is applied:

- the patch tries to add a value to an array (which is a slice in the corresponding go struct)

- the slice was defined with omitempty

- the slice currently does not exist

A workaround in this particular case is to create the array in the patch instead of adding to the non-existing one. When creating the slice, existing values would be overwritten so this should only be used when it does not exist.

The following example shows both cases to consider while writing a patch for adding a value to a slice.

This patch aims to add a file to the files slice of a KubeadmConfigTemplate which has omitempty set.

This patch requires the key .spec.template.spec.files to exist to succeed.

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: my-clusterclass
    spec:
      ...
      patches:
      - name: add file
        definitions:
        - selector:
            apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
            kind: KubeadmConfigTemplate
          jsonPatches:
          - op: add
            path: /spec/template/spec/files/-
            value:
              content: Some content.
              path: /some/file
    ---
    apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
    kind: KubeadmConfigTemplate
    metadata:
      name: "quick-start-default-worker-bootstraptemplate"
    spec:
      template:
        spec:
          ...
          files:
          - content: Some other content
            path: /some/other/file

This patch would overwrite an existing slice at .spec.template.spec.files.

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: my-clusterclass
    spec:
      ...
      patches:
      - name: add file
        definitions:
        - selector:
            apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
            kind: KubeadmConfigTemplate
          jsonPatches:
          - op: add
            path: /spec/template/spec/files
            value:
            - content: Some content.
              path: /some/file
    ---
    apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
    kind: KubeadmConfigTemplate
    metadata:
      name: "quick-start-default-worker-bootstraptemplate"
    spec:
      template:
        spec:
          ...

Changing a ClusterClass

Selecting a strategy

When planning a change to a ClusterClass, users should always take into consideration how those changes might impact the existing Clusters already using the ClusterClass, if any.

There are two strategies for defining how a ClusterClass change rolls out to existing Clusters:

- Roll out ClusterClass changes to existing Clusters in a controlled/incremental fashion.

- Roll out ClusterClass changes to all the existing Clusters immediately.

The first strategy is the recommended choice for people starting with ClusterClass; it requires the users to create a new ClusterClass with the expected changes, and then rebase each Cluster to use the newly created ClusterClass.

By splitting the change to the ClusterClass and its rollout to Clusters into separate steps the user will reduce the risk of introducing unexpected changes on existing Clusters, or at least limit the blast radius of those changes to a small number of Clusters already rebased (in fact it is similar to a canary deployment).

The second strategy listed above instead requires changing a ClusterClass “in place”, which can be simpler and faster than creating a new ClusterClass. However, this approach means that changes are immediately propagated to all the Clusters already using the modified ClusterClass. Any operation involving many Clusters at the same time has intrinsic risks, and it can impact heavily on the underlying infrastructure in case the operation triggers machine rollout across the entire fleet of Clusters.

Regardless of which strategy you choose to implement your changes to a ClusterClass, please make sure to:

- Plan ClusterClass changes before applying them.

- Understand what Compatibility Checks are and how to prevent changes that can lead to non-functional Clusters.

If you are instead interested in understanding which kind of effects you should expect on the Clusters, or in additional details about the internals of the topology reconciler, you can start by reading the notes in the Plan ClusterClass changes documentation or by looking at the reference documentation at the end of this page.

Changing ClusterClass templates

Templates are an integral part of a ClusterClass, and thus the same considerations described in the previous paragraph apply. When changing a template referenced in a ClusterClass users should also always plan for how the change should be propagated to the existing Clusters and choose the strategy that best suits expectations.

According to the Cluster API operational practices, the recommended way for updating templates is by template rotation:

- Create a new template

- Update the template reference in the ClusterClass

- Delete the old template

In place template mutations

In case a provider supports in place template mutations, the Cluster API topology controller will adapt to them during the next reconciliation, but the system does not watch for those changes. This means that when the underlying template is updated, the changes may not be reflected immediately; they will be picked up during the next full reconciliation. The maximum time until the next full reconciliation is equal to the CAPI controller sync period (defaults to 10 minutes).

Reusing templates across ClusterClasses

As already discussed in Writing a ClusterClass, while it is technically possible to re-use a template across ClusterClasses, this practice is not recommended because it makes it difficult to reason about the impact that changing such a template can have on existing Clusters.

Also in case of changes to the ClusterClass templates, please make sure to:

- Plan ClusterClass changes before applying them.

- Understand what Compatibility Checks are and how to prevent changes that can lead to non-functional Clusters.

You can learn more about this by reading the notes in the Plan ClusterClass changes documentation or by looking at the reference documentation at the end of this page.

Rebase

Rebasing is an operational practice for transitioning a Cluster from one ClusterClass to another, and the operation can be triggered by simply changing the value in Cluster.spec.topology.class.

Also in this case, please make sure to:

- Plan ClusterClass changes before applying them.

- Understand what Compatibility Checks are and how to prevent changes that can lead to non-functional Clusters.

You can learn more about this by reading the notes in the Plan ClusterClass changes documentation or by looking at the reference documentation at the end of this page.

Compatibility Checks

When changing a ClusterClass, the system validates the required changes according to a set of “compatibility rules” in order to prevent changes which would lead to a non-functional Cluster, e.g. changing the InfrastructureProvider from AWS to Azure.

If the proposed changes are evaluated as dangerous, the operation is rejected.

Warning

In the current implementation there are no compatibility rules for changes to provider templates, so you should refer to the provider documentation to avoid potentially dangerous changes on those objects.

For additional info see compatibility rules defined in the ClusterClass proposal.

Planning ClusterClass changes

It is highly recommended to always generate a plan for ClusterClass changes before applying them, no matter if you are creating a new ClusterClass and rebasing Clusters or if you are changing your ClusterClass in place.

The clusterctl tool provides a new alpha command for this operation, clusterctl alpha topology plan.

The output of this command will provide you with all the details about how those changes would impact Clusters, but the following notes can help you understand what to expect when planning your ClusterClass changes:

- Users should expect the resources in a Cluster (e.g. MachineDeployments) to behave consistently no matter if a change is applied via a ClusterClass or directly, as you do in a Cluster without a ClusterClass. In other words, if someone changes something on a KCP object triggering a control plane Machines rollout, you should expect the same to happen when the same change is applied to the KCP template in ClusterClass.

- Users should expect the Cluster topology to change consistently irrespective of how the change has been implemented inside the ClusterClass or applied to the ClusterClass. In other words, if you change a template field “in place”, or if you rotate the template referenced in the ClusterClass by pointing to a new template with the same field changed, or if you change the same field via a patch, the effects on the Cluster are the same.

See reference for more details.

Reference

Effects on the Clusters

The following table documents the effects each ClusterClass change can have on a Cluster. Similar considerations apply to changes introduced by changes in Cluster.Topology or by changes introduced by patches.

NOTE: for people used to operating Cluster API without ClusterClass, it could also help to keep in mind that the underlying objects like control plane and MachineDeployment act in the same way with and without a ClusterClass.

| Changed field | Effects on Clusters |

|---|---|

| infrastructure.ref | Corresponding InfrastructureCluster objects are updated (in place update). |

| controlPlane.metadata | If labels/annotations are added, changed or deleted the ControlPlane objects are updated (in place update). In case of KCP, corresponding controlPlane Machines, KubeadmConfigs and InfrastructureMachines are updated in-place. |

| controlPlane.ref | Corresponding ControlPlane objects are updated (in place update). If updating ControlPlane objects implies changes in the spec, the corresponding ControlPlane Machines are updated accordingly (rollout). |

| controlPlane.machineInfrastructure.ref | If the referenced template has changes only in metadata labels or annotations, the corresponding InfrastructureMachineTemplates are updated (in place update). If the referenced template has changes in the spec: - Corresponding InfrastructureMachineTemplate are rotated (create new, delete old) - Corresponding ControlPlane objects are updated with the reference to the newly created template (in place update) - The corresponding controlPlane Machines are updated accordingly (rollout). |

| controlPlane.nodeDrainTimeout | If the value is changed the ControlPlane object is updated in-place. In case of KCP, the change is propagated in-place to control plane Machines. |

| controlPlane.nodeVolumeDetachTimeout | If the value is changed the ControlPlane object is updated in-place. In case of KCP, the change is propagated in-place to control plane Machines. |

| controlPlane.nodeDeletionTimeout | If the value is changed the ControlPlane object is updated in-place. In case of KCP, the change is propagated in-place to control plane Machines. |

| workers.machineDeployments | If a new MachineDeploymentClass is added, no changes are triggered to the Clusters. If an existing MachineDeploymentClass is changed, effect depends on the type of change (see below). |

| workers.machineDeployments[].template.metadata | If labels/annotations are added, changed or deleted the MachineDeployment objects are updated (in place update) and corresponding worker Machines are updated (in-place). |

| workers.machineDeployments[].template.bootstrap.ref | If the referenced template has changes only in metadata labels or annotations, the corresponding BootstrapTemplates are updated (in place update). If the referenced template has changes in the spec: - Corresponding BootstrapTemplate are rotated (create new, delete old). - Corresponding MachineDeployments objects are updated with the reference to the newly created template (in place update). - The corresponding worker machines are updated accordingly (rollout) |

| workers.machineDeployments[].template.infrastructure.ref | If the referenced template has changes only in metadata labels or annotations, the corresponding InfrastructureMachineTemplates are updated (in place update). If the referenced template has changes in the spec: - Corresponding InfrastructureMachineTemplate are rotated (create new, delete old). - Corresponding MachineDeployments objects are updated with the reference to the newly created template (in place update). - The corresponding worker Machines are updated accordingly (rollout) |

| workers.machineDeployments[].template.nodeDrainTimeout | If the value is changed the MachineDeployment is updated in-place. The change is propagated in-place to the MachineDeployment Machine. |

| workers.machineDeployments[].template.nodeVolumeDetachTimeout | If the value is changed the MachineDeployment is updated in-place. The change is propagated in-place to the MachineDeployment Machine. |

| workers.machineDeployments[].template.nodeDeletionTimeout | If the value is changed the MachineDeployment is updated in-place. The change is propagated in-place to the MachineDeployment Machine. |

| workers.machineDeployments[].template.minReadySeconds | If the value is changed the MachineDeployment is updated in-place. |

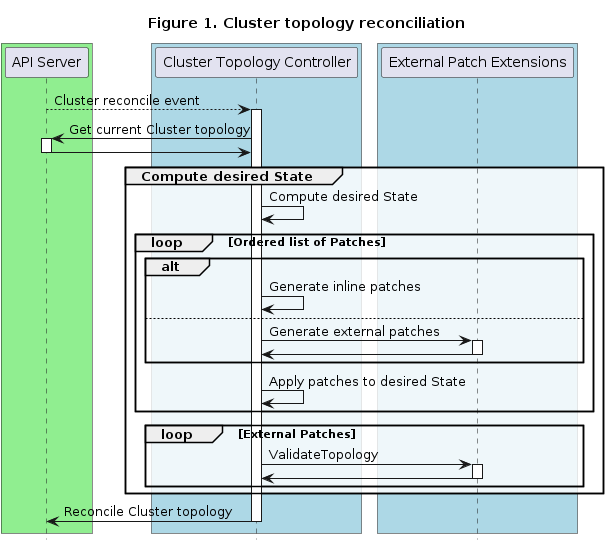

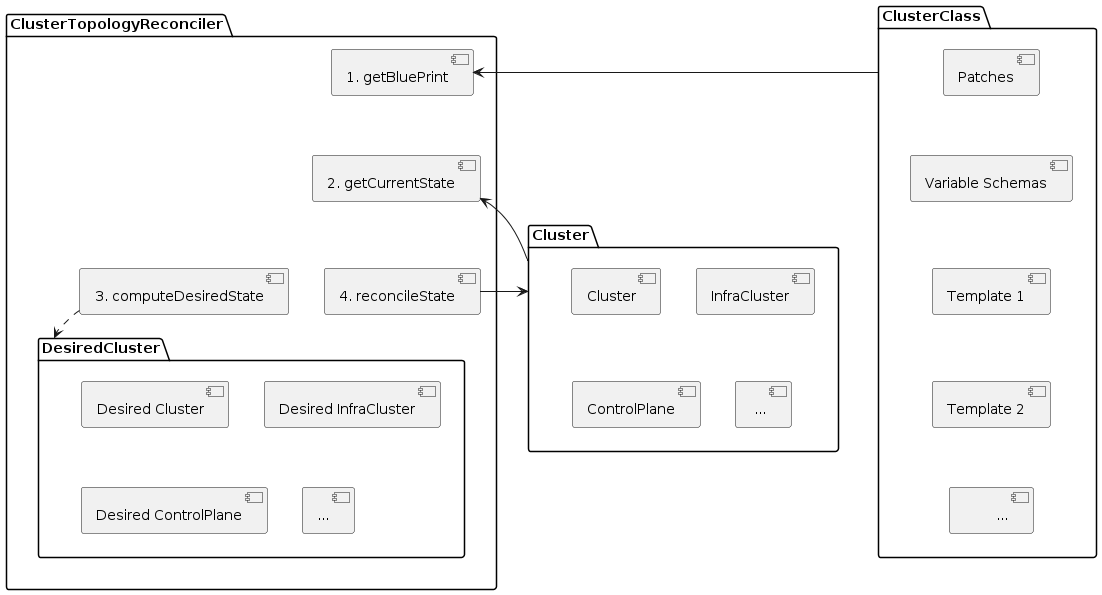

How the topology controller reconciles template fields

The topology reconciler enforces values defined in the ClusterClass templates into the topology owned objects in a Cluster.

More specifically, the topology controller uses Server Side Apply to write/patch topology owned objects; using SSA allows other controllers to co-author the generated objects, like e.g. adding info for subnets in CAPA.

What about patches?

The considerations above apply also when using patches, the only difference being that the set of fields that are enforced should be determined by applying patches on top of the templates.

A corollary of the behaviour described above is that it is technically possible to change fields in the object which are not derived from the templates and patches, but we advise against using the possibility or making ad-hoc changes in generated objects unless otherwise needed for a workaround. It is always preferable to improve ClusterClasses by supporting new Cluster variants in a reusable way.

Operating a managed Cluster

The spec.topology field added to the Cluster object as part of ClusterClass allows changes made on the Cluster to be propagated across all relevant objects. This means the Cluster object can be used as a single point of control for making changes to objects that are part of the Cluster, including the ControlPlane and MachineDeployments.

A managed Cluster can be used to:

- Upgrade a Cluster

- Scale a ControlPlane

- Scale a MachineDeployment

- Add a MachineDeployment

- Use variables in a Cluster

- Rebase a Cluster to a different ClusterClass

- Upgrading Cluster API

- Tips and tricks

Upgrade a Cluster

With a managed topology, upgrading a Kubernetes cluster is a one-touch operation.

Let’s assume we have created a CAPD cluster with ClusterClass and specified Kubernetes v1.21.2 (as documented in the Quick Start guide). Specifying the version is done when running clusterctl generate cluster. Looking at the cluster, the version of the control plane and the MachineDeployments is v1.21.2.

> kubectl get kubeadmcontrolplane,machinedeployments

NAME CLUSTER INITIALIZED API SERVER AVAILABLE REPLICAS READY UPDATED UNAVAILABLE AGE VERSION

kubeadmcontrolplane.controlplane.cluster.x-k8s.io/clusterclass-quickstart-XXXX clusterclass-quickstart true true 1 1 1 0 2m21s v1.21.2

NAME CLUSTER REPLICAS READY UPDATED UNAVAILABLE PHASE AGE VERSION

machinedeployment.cluster.x-k8s.io/clusterclass-quickstart-linux-workers-XXXX clusterclass-quickstart 1 1 1 0 Running 2m21s v1.21.2

To update the Cluster the only change needed is to the version field under spec.topology in the Cluster object.

Change 1.21.2 to 1.22.0 as below.

kubectl patch cluster clusterclass-quickstart --type json --patch '[{"op": "replace", "path": "/spec/topology/version", "value": "v1.22.0"}]'

The patch will make the following change to the Cluster yaml:

spec:

topology:

class: quick-start

+ version: v1.22.0

- version: v1.21.2

Important Note: A +2 minor Kubernetes version upgrade is not allowed in Cluster Topologies. This is to align with existing control plane providers, like KubeadmControlPlane provider, that limit a +2 minor version upgrade. Example: Upgrading from 1.21.2 to 1.23.0 is not allowed.
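The rule in the note above can be checked before patching the Cluster. The following Go sketch is an illustration only, not the validation Cluster API itself performs; the function names are made up for this example, and it assumes versions of the form vMAJOR.MINOR.PATCH with the same major version on both sides.

```go
package main

import (
	"fmt"
	"strings"
)

// parseMajorMinor extracts the major and minor numbers from a version
// string such as "v1.21.2".
func parseMajorMinor(version string) (major, minor int, err error) {
	var patch int
	_, err = fmt.Sscanf(strings.TrimPrefix(version, "v"), "%d.%d.%d", &major, &minor, &patch)
	return major, minor, err
}

// skipsMinorVersion reports whether moving from one version to another
// would jump two or more minor versions.
func skipsMinorVersion(from, to string) (bool, error) {
	fromMajor, fromMinor, err := parseMajorMinor(from)
	if err != nil {
		return false, fmt.Errorf("parsing %q: %w", from, err)
	}
	toMajor, toMinor, err := parseMajorMinor(to)
	if err != nil {
		return false, fmt.Errorf("parsing %q: %w", to, err)
	}
	if fromMajor != toMajor {
		// Assumption: major version changes are out of scope for this sketch.
		return false, fmt.Errorf("major versions differ (%d vs %d)", fromMajor, toMajor)
	}
	return toMinor-fromMinor >= 2, nil
}

func main() {
	for _, target := range []string{"v1.22.0", "v1.23.0"} {
		skips, err := skipsMinorVersion("v1.21.2", target)
		fmt.Println(target, skips, err)
	}
}
```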

The upgrade will take some time to roll out as it will take place machine by machine with older versions of the machines only being removed after healthy newer versions come online.

To watch the update progress run:

watch kubectl get kubeadmcontrolplane,machinedeployments

After a few minutes the upgrade will be complete and the output will be similar to:

NAME CLUSTER INITIALIZED API SERVER AVAILABLE REPLICAS READY UPDATED UNAVAILABLE AGE VERSION

kubeadmcontrolplane.controlplane.cluster.x-k8s.io/clusterclass-quickstart-XXXX clusterclass-quickstart true true 1 1 1 0 7m29s v1.22.0

NAME CLUSTER REPLICAS READY UPDATED UNAVAILABLE PHASE AGE VERSION

machinedeployment.cluster.x-k8s.io/clusterclass-quickstart-linux-workers-XXXX clusterclass-quickstart 1 1 1 0 Running 7m29s v1.22.0

Scale a MachineDeployment

When using a managed topology scaling of MachineDeployments, both up and down, should be done through the Cluster topology.

Assume we have created a CAPD cluster with ClusterClass and Kubernetes v1.23.3 (as documented in the Quick Start guide). Initially we should have a MachineDeployment with 3 replicas. Running

kubectl get machinedeployments

Will give us:

NAME CLUSTER REPLICAS READY UPDATED UNAVAILABLE PHASE AGE VERSION

machinedeployment.cluster.x-k8s.io/capi-quickstart-md-0-XXXX capi-quickstart 3 3 3 0 Running 21m v1.23.3

We can scale up or down this MachineDeployment through the Cluster object by changing the replicas field under /spec/topology/workers/machineDeployments/0/replicas

The 0 in the path refers to the position of the target MachineDeployment in the list of our Cluster topology. As we only have one MachineDeployment we’re targeting the first item in the list under /spec/topology/workers/machineDeployments/.

To change this value with a patch:

kubectl patch cluster capi-quickstart --type json --patch '[{"op": "replace", "path": "/spec/topology/workers/machineDeployments/0/replicas", "value": 1}]'

This patch will make the following changes on the Cluster yaml:

spec:

topology:

workers:

machineDeployments:

- class: default-worker

name: md-0

metadata: {}

+ replicas: 1

- replicas: 3

After a minute the MachineDeployment will have scaled down to 1 replica:

NAME CLUSTER REPLICAS READY UPDATED UNAVAILABLE PHASE AGE VERSION

capi-quickstart-md-0-XXXXX capi-quickstart 1 1 1 0 Running 25m v1.23.3

As well as scaling a MachineDeployment, Cluster operators can edit the labels and annotations applied to a running MachineDeployment using the Cluster topology as a single point of control.
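Because the JSON patch path addresses a MachineDeployment by its position in the list, patching the wrong index scales the wrong MachineDeployment once a Cluster has more than one. A minimal Go sketch of looking up the index by name and building the path is shown below; the type and function names are hypothetical, and the JSON input stands in for the content of spec.topology.workers.machineDeployments read from the Cluster.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// machineDeploymentTopology holds only the fields this sketch needs.
type machineDeploymentTopology struct {
	Name  string `json:"name"`
	Class string `json:"class"`
}

// replicasPath returns the JSON pointer to the replicas field of the
// MachineDeployment with the given name in the Cluster topology.
func replicasPath(mds []machineDeploymentTopology, name string) (string, error) {
	for i, md := range mds {
		if md.Name == name {
			return fmt.Sprintf("/spec/topology/workers/machineDeployments/%d/replicas", i), nil
		}
	}
	return "", fmt.Errorf("machineDeployment %q not found in the Cluster topology", name)
}

func main() {
	// Example content of spec.topology.workers.machineDeployments.
	raw := `[{"class":"default-worker","name":"md-0","replicas":3}]`

	var mds []machineDeploymentTopology
	if err := json.Unmarshal([]byte(raw), &mds); err != nil {
		panic(err)
	}
	path, err := replicasPath(mds, "md-0")
	fmt.Println(path, err)
}
```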

Add a MachineDeployment

MachineDeployments in a managed Cluster are defined in the Cluster’s topology. Cluster operators can add a MachineDeployment to a living Cluster by adding it to the cluster.spec.topology.workers.machineDeployments field.

Assume we have created a CAPD cluster with ClusterClass and Kubernetes v1.23.3 (as documented in the Quick Start guide). Initially we should have a single MachineDeployment with 3 replicas. Running

kubectl get machinedeployments

Will give us:

NAME CLUSTER REPLICAS READY UPDATED UNAVAILABLE PHASE AGE VERSION

machinedeployment.cluster.x-k8s.io/capi-quickstart-md-0-XXXX capi-quickstart 3 3 3 0 Running 21m v1.23.3

A new MachineDeployment can be added to the Cluster by adding a new MachineDeployment spec under /spec/topology/workers/machineDeployments/. To do so we can patch our Cluster with:

kubectl patch cluster capi-quickstart --type json --patch '[{"op": "add", "path": "/spec/topology/workers/machineDeployments/-", "value": {"name": "second-deployment", "replicas": 1, "class": "default-worker"} }]'

This patch will make the below changes on the Cluster yaml:

spec:

topology:

workers:

machineDeployments:

- class: default-worker

metadata: {}

replicas: 3

name: md-0

+ - class: default-worker

+ metadata: {}

+ replicas: 1

+ name: second-deployment

After about a minute, once the new MachineDeployment has scaled up, we get:

NAME CLUSTER REPLICAS READY UPDATED UNAVAILABLE PHASE AGE VERSION

capi-quickstart-md-0-XXXX capi-quickstart 1 1 1 0 Running 39m v1.23.3

capi-quickstart-second-deployment-XXXX capi-quickstart 1 1 1 0 Running 99s v1.23.3

Our second deployment uses the same underlying MachineDeployment class default-worker as our initial deployment. In this case they will both have exactly the same underlying machine templates. In order to modify the templates MachineDeployments are based on take a look at Changing a ClusterClass.

A similar process as that described here - removing the MachineDeployment from cluster.spec.topology.workers.machineDeployments - can be used to delete a running MachineDeployment from an active Cluster.

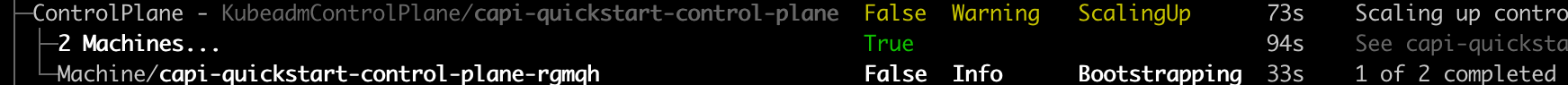

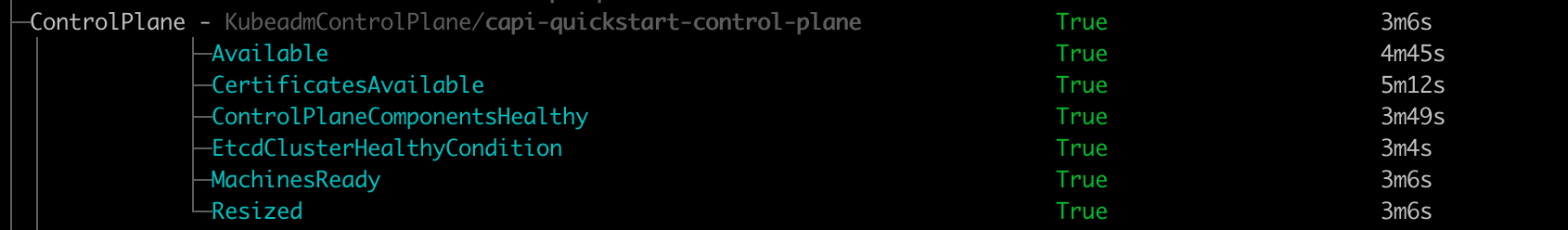

Scale a ControlPlane

When using a managed topology scaling of ControlPlane Machines, where the Cluster is using a topology that includes ControlPlane MachineInfrastructure, should be done through the Cluster topology.

This is done by changing the ControlPlane replicas field at /spec/topology/controlPlane/replicas in the Cluster object. The command is:

kubectl patch cluster capi-quickstart --type json --patch '[{"op": "replace", "path": "/spec/topology/controlPlane/replicas", "value": 1}]'

This patch will make the below changes on the Cluster yaml:

spec:

topology:

controlPlane:

metadata: {}

+ replicas: 1

- replicas: 3

As well as scaling a ControlPlane, Cluster operators can edit the labels and annotations applied to a running ControlPlane using the Cluster topology as a single point of control.

Use variables

A ClusterClass can use variables and patches in order to allow flexible customization of Clusters derived from a ClusterClass. Variable definition allows two or more Cluster topologies derived from the same ClusterClass to have different specs, with the differences controlled by variables in the Cluster topology.

Assume we have created a CAPD cluster with ClusterClass and Kubernetes v1.23.3 (as documented in the Quick Start guide). Our Cluster has a variable etcdImageTag as defined in the ClusterClass. The variable is not set on our Cluster. Some variables, depending on their definition in a ClusterClass, may need to be specified by the Cluster operator for every Cluster created using a given ClusterClass.

In order to specify the value of a variable all we have to do is set the value in the Cluster topology.

We can see the current unset variable with:

kubectl get cluster capi-quickstart -o jsonpath='{.spec.topology.variables[1]}'

Which will return something like:

{"name":"etcdImageTag","value":""}

In order to run a different version of etcd in new ControlPlane machines - the part of the spec this variable sets - change the value using the below patch:

kubectl patch cluster capi-quickstart --type json --patch '[{"op": "replace", "path": "/spec/topology/variables/1/value", "value": "3.5.0"}]'

Running the patch makes the following change to the Cluster yaml:

spec:

topology:

variables:

- name: imageRepository

value: registry.k8s.io

- name: etcdImageTag

+ value: "3.5.0"

- value: ""

- name: coreDNSImageTag

value: ""

Retrieving the variable value from the Cluster object, with kubectl get cluster capi-quickstart -o jsonpath='{.spec.topology.variables[1]}' we can see:

{"name":"etcdImageTag","value":"3.5.0"}

Note: Changing the etcd version may have unintended impacts on a running Cluster. For safety the cluster should be reapplied after running the above variable patch.

Rebase a Cluster

To perform more significant changes using a Cluster as a single point of control, it may be necessary to change the ClusterClass that the Cluster is based on. This is done by changing the class referenced in /spec/topology/class.

To read more about changing an underlying class please refer to ClusterClass rebase.
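As with the other operations on this page, a rebase can be expressed as a JSON patch against the Cluster. The Go sketch below only builds the patch body, so that it can be reviewed or passed to kubectl patch with --type json; the ClusterClass name quick-start-v2 and the helper names are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// jsonPatchOp is a single JSON Patch operation.
type jsonPatchOp struct {
	Op    string `json:"op"`
	Path  string `json:"path"`
	Value any    `json:"value"`
}

// rebasePatch returns the JSON patch body that points the Cluster topology
// at a different ClusterClass.
func rebasePatch(newClass string) (string, error) {
	ops := []jsonPatchOp{
		{Op: "replace", Path: "/spec/topology/class", Value: newClass},
	}
	body, err := json.Marshal(ops)
	if err != nil {
		return "", err
	}
	return string(body), nil
}

func main() {
	// "quick-start-v2" is a made-up name for the new ClusterClass.
	patch, err := rebasePatch("quick-start-v2")
	if err != nil {
		panic(err)
	}
	fmt.Printf("kubectl patch cluster capi-quickstart --type json --patch '%s'\n", patch)
}
```

Generating the body with a JSON encoder avoids quoting mistakes in hand-written patches; planning the change first, as recommended above, still applies.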

Tips and tricks

Users should always aim at ensuring the stability of the Cluster and of the applications hosted on it while using spec.topology as a single point of control for making changes to the objects that are part of the Cluster.

The following recommendations apply:

- If possible, avoid concurrent changes to control-plane and/or MachineDeployments to prevent excessive turnover on the underlying infrastructure or bottlenecks in the Cluster trying to move workloads from one machine to the other.

- Keep machine labels and annotations stable, because changing those values requires machine rollouts; also, please note that machine labels and annotations are not propagated to Kubernetes nodes; see metadata propagation.

- While upgrading a Cluster, if possible avoid any other concurrent change to the Cluster; please note that you can rely on version-aware patches to ensure the Cluster adapts to the new Kubernetes version in sync with the upgrade workflow.

For more details about how changes can affect a Cluster, please look at reference.

Effects of concurrent changes

When applying concurrent changes to a Cluster, the topology controller will immediately act in order to reconcile to the desired state, and thus proxy all the required changes to the underlying objects which in turn take action, and this might require rolling out machines (create new, delete old).

As noted above, when executed at scale this might create excessive turnover on the underlying infrastructure or bottlenecks in the Cluster trying to move workloads from one machine to the other.

Additionally, a change of the Kubernetes version combined with other concurrent changes to MachineDeployments could lead to a double rollout of the worker nodes:

- The first rollout is triggered by the changes to the MachineDeployments, which are immediately applied to the underlying objects (e.g. a change of labels).

- The second rollout is triggered by the upgrade workflow, which changes the MachineDeployment version only after the control plane upgrade is completed (see upgrade a cluster above).

Please note that:

- Cluster API already implements strategies to ensure changes in a Cluster are executed in a safe way under most of the circumstances, including users occasionally not acting according to above best practices;

- The above-mentioned strategies are currently implemented on the abstraction controlling a single set of machines, the control-plane (KCP) or the MachineDeployment;

- In the future, managed topologies could be improved by introducing strategies to ensure higher safety across all the abstractions controlling Machines in a Cluster, but this work is currently at an initial stage and user feedback could help in shaping those improvements.

- Similarly, in the future we might consider implementing strategies to control changes across many Clusters.

Upgrading Cluster API

There are some special considerations for ClusterClass regarding Cluster API upgrades when the upgrade includes a bump of the apiVersion of infrastructure, bootstrap or control plane provider CRDs.

The recommended approach is to first upgrade Cluster API and then update the apiVersions in the ClusterClass references afterwards. Following these steps does not disrupt reconciliation, because the Cluster topology controller is able to reconcile the Cluster even with the old apiVersions in the ClusterClass.

Note: The apiVersions in ClusterClass cannot be updated before Cluster API because the new apiVersions don’t exist in the management cluster before the Cluster API upgrade.

In general the Cluster topology controller always uses exactly the versions of the CRDs referenced in the ClusterClass. This means in the following example the Cluster topology controller will always use v1beta1 when reconciling/applying patches for the infrastructure ref, even if the DockerClusterTemplate already has a v1beta2 apiVersion.

apiVersion: cluster.x-k8s.io/v1beta1

kind: ClusterClass

metadata:

name: quick-start

namespace: default

spec:

infrastructure:

ref:

apiVersion: infrastructure.cluster.x-k8s.io/v1beta1

kind: DockerClusterTemplate

...

Bumping apiVersions in ClusterClass

When upgrading the apiVersions in references in the ClusterClass, the corresponding patches have to be changed accordingly. This includes bumping the apiVersion in the patch selector and potentially adapting the JSON patch to changes in the new apiVersion of the referenced CRD. The following example shows how to upgrade the ClusterClass in this case.

ClusterClass with the old apiVersion:

apiVersion: cluster.x-k8s.io/v1beta1

kind: ClusterClass

metadata:

name: quick-start

spec:

infrastructure:

ref:

apiVersion: infrastructure.cluster.x-k8s.io/v1beta1

kind: DockerClusterTemplate

...

patches:

- name: lbImageRepository

definitions:

- selector:

apiVersion: infrastructure.cluster.x-k8s.io/v1beta1

kind: DockerClusterTemplate

matchResources:

infrastructureCluster: true

jsonPatches:

- op: add

path: "/spec/template/spec/loadBalancer/imageRepository"

valueFrom:

variable: lbImageRepository

ClusterClass with the new apiVersion:

apiVersion: cluster.x-k8s.io/v1beta1

kind: ClusterClass

metadata:

name: quick-start

spec:

infrastructure:

ref:

apiVersion: infrastructure.cluster.x-k8s.io/v1beta2 # apiVersion updated

kind: DockerClusterTemplate

...

patches:

- name: lbImageRepository

definitions:

- selector:

apiVersion: infrastructure.cluster.x-k8s.io/v1beta2 # apiVersion updated

kind: DockerClusterTemplate

matchResources:

infrastructureCluster: true

jsonPatches:

- op: add

# Path has been updated, as in this example imageRepository has been renamed

# to imageRepo in v1beta2 of DockerClusterTemplate.

path: "/spec/template/spec/loadBalancer/imageRepo"

valueFrom:

variable: lbImageRepository

If external patches are used in the ClusterClass, it has to be ensured that all external patches support the new apiVersion before bumping apiVersions.
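Why the selector has to be bumped together with the reference can be illustrated with a small Go sketch. It assumes, as a simplification, that a patch definition applies to a template only when apiVersion and kind match exactly, and it ignores matchResources; the types and the function below are hypothetical and are not the Cluster API implementation.

```go
package main

import "fmt"

// templateRef identifies a template referenced by the ClusterClass.
type templateRef struct {
	APIVersion string
	Kind       string
}

// patchSelector mirrors the apiVersion and kind of a patch selector.
type patchSelector struct {
	APIVersion string
	Kind       string
}

// selectorMatches reports whether the selector targets the given template,
// assuming an exact match on apiVersion and kind.
func selectorMatches(sel patchSelector, ref templateRef) bool {
	return sel.APIVersion == ref.APIVersion && sel.Kind == ref.Kind
}

func main() {
	ref := templateRef{
		APIVersion: "infrastructure.cluster.x-k8s.io/v1beta2",
		Kind:       "DockerClusterTemplate",
	}
	oldSelector := patchSelector{
		APIVersion: "infrastructure.cluster.x-k8s.io/v1beta1",
		Kind:       "DockerClusterTemplate",
	}
	newSelector := patchSelector{
		APIVersion: "infrastructure.cluster.x-k8s.io/v1beta2",
		Kind:       "DockerClusterTemplate",
	}

	// Under the stated assumption, a selector left at the old apiVersion no
	// longer selects the template once the reference has been bumped.
	fmt.Println(selectorMatches(oldSelector, ref), selectorMatches(newSelector, ref))
}
```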

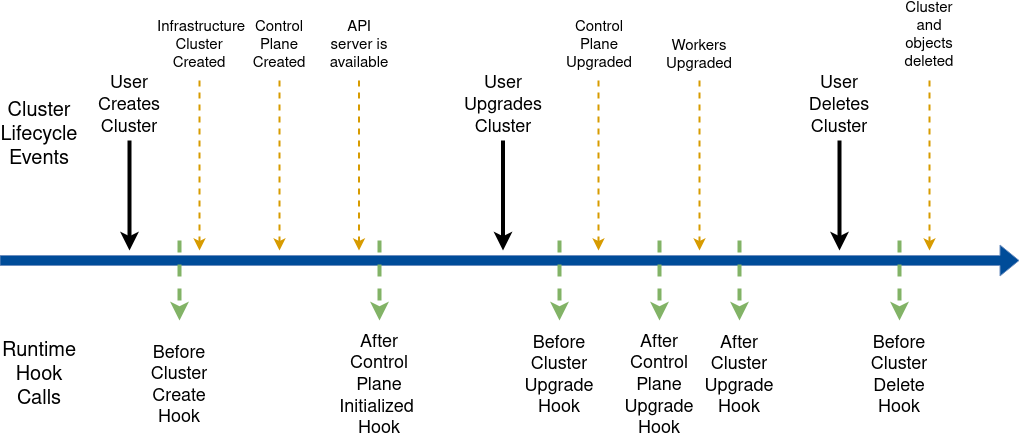

Experimental Feature: Runtime SDK (alpha)

The Runtime SDK feature provides an extensibility mechanism that allows systems, products, and services built on top of Cluster API to hook into a workload cluster’s lifecycle.

All currently implemented hooks require the ClusterClass feature to be enabled as well.

Caution

Please note Runtime SDK is an advanced feature. If implemented incorrectly, a failing Runtime Extension can severely impact the Cluster API runtime.

Feature gate name: RuntimeSDK

Variable name to enable/disable the feature gate: EXP_RUNTIME_SDK

Additional documentation:

- Background information:

- For Runtime Extension developers:

- For Cluster operators:

Implementing Runtime Extensions

Caution

Please note Runtime SDK is an advanced feature. If implemented incorrectly, a failing Runtime Extension can severely impact the Cluster API runtime.

Introduction

As a developer building systems on top of Cluster API, if you want to hook into the Cluster’s lifecycle via a Runtime Hook, you have to implement a Runtime Extension handling requests according to the OpenAPI specification for the Runtime Hook you are interested in.

Runtime Extensions are by design very powerful and flexible; however, given that with great power comes great responsibility, a few key considerations should always be kept in mind (more details in the following sections):

- Runtime Extensions are components that should be designed, written and deployed with great caution given that they can affect the proper functioning of the Cluster API runtime.

- Cluster administrators should carefully vet any Runtime Extension registration, thus preventing malicious components from being added to the system.

Please note that following similar practices is already commonly accepted in the Kubernetes ecosystem for Kubernetes API server admission webhooks. Runtime Extensions share the same foundation and most of the same considerations/concerns apply.

Implementation

As mentioned above, as a developer building systems on top of Cluster API, if you want to hook into the Cluster’s lifecycle via a Runtime Extension, you have to implement an HTTPS server handling a discovery request and a set of additional requests according to the OpenAPI specification for the Runtime Hook you are interested in.

The following shows a minimal example of a Runtime Extension server implementation:

package main

import (

"context"

"flag"

"net/http"

_ "net/http/pprof" // Registers the pprof handlers served by the profiler below.

"os"

"github.com/spf13/pflag"

cliflag "k8s.io/component-base/cli/flag"

"k8s.io/component-base/logs"

logsv1 "k8s.io/component-base/logs/api/v1"

"k8s.io/klog/v2"

ctrl "sigs.k8s.io/controller-runtime"

runtimecatalog "sigs.k8s.io/cluster-api/exp/runtime/catalog"

runtimehooksv1 "sigs.k8s.io/cluster-api/exp/runtime/hooks/api/v1alpha1"

"sigs.k8s.io/cluster-api/exp/runtime/server"

)

var (

// catalog contains all information about RuntimeHooks.

catalog = runtimecatalog.New()

// Flags.

profilerAddress string

webhookPort int

webhookCertDir string

logOptions = logs.NewOptions()

)

func init() {

// Adds to the catalog all the RuntimeHooks defined in cluster API.

_ = runtimehooksv1.AddToCatalog(catalog)

}

// InitFlags initializes the flags.

func InitFlags(fs *pflag.FlagSet) {

// Initialize logs flags using Kubernetes component-base machinery.

logsv1.AddFlags(logOptions, fs)

// Add test-extension specific flags

fs.StringVar(&profilerAddress, "profiler-address", "",

"Bind address to expose the pprof profiler (e.g. localhost:6060)")

fs.IntVar(&webhookPort, "webhook-port", 9443,

"Webhook Server port")

fs.StringVar(&webhookCertDir, "webhook-cert-dir", "/tmp/k8s-webhook-server/serving-certs/",

"Webhook cert dir.")

}

func main() {
	// Creates a logger to be used during the main func.
	setupLog := ctrl.Log.WithName("setup")

	// Initialize and parse command line flags.
	InitFlags(pflag.CommandLine)
	pflag.CommandLine.SetNormalizeFunc(cliflag.WordSepNormalizeFunc)
	pflag.CommandLine.AddGoFlagSet(flag.CommandLine)

	// Set log level 2 as default.
	if err := pflag.CommandLine.Set("v", "2"); err != nil {
		setupLog.Error(err, "failed to set default log level")
		os.Exit(1)
	}
	pflag.Parse()

	// Validates logs flags using Kubernetes component-base machinery and applies them
	if err := logsv1.ValidateAndApply(logOptions, nil); err != nil {
		setupLog.Error(err, "unable to start extension")
		os.Exit(1)
	}

	// Add the klog logger in the context.
	ctrl.SetLogger(klog.Background())

	// Initialize the golang profiler server, if required.
	if profilerAddress != "" {
		klog.Infof("Profiler listening for requests at %s", profilerAddress)
		go func() {
			klog.Info(http.ListenAndServe(profilerAddress, nil))
		}()
	}

	// Create an HTTP server for serving runtime extensions
	webhookServer, err := server.New(server.Options{
		Catalog: catalog,
		Port:    webhookPort,
		CertDir: webhookCertDir,
	})
	if err != nil {
		setupLog.Error(err, "error creating webhook server")
		os.Exit(1)
	}

	// Register extension handlers.
	if err := webhookServer.AddExtensionHandler(server.ExtensionHandler{
		Hook:        runtimehooksv1.BeforeClusterCreate,
		Name:        "before-cluster-create",
		HandlerFunc: DoBeforeClusterCreate,
	}); err != nil {
		setupLog.Error(err, "error adding handler")
		os.Exit(1)
	}

	if err := webhookServer.AddExtensionHandler(server.ExtensionHandler{
		Hook:        runtimehooksv1.BeforeClusterUpgrade,
		Name:        "before-cluster-upgrade",
		HandlerFunc: DoBeforeClusterUpgrade,
	}); err != nil {
		setupLog.Error(err, "error adding handler")
		os.Exit(1)
	}

	// Set up a context that is cancelled on SIGINT or SIGTERM.
	ctx := ctrl.SetupSignalHandler()

	// Start the HTTPS server.
	setupLog.Info("Starting Runtime Extension server")
	if err := webhookServer.Start(ctx); err != nil {
		setupLog.Error(err, "error running webhook server")
		os.Exit(1)
	}
}

// DoBeforeClusterCreate handles calls to the BeforeClusterCreate hook.
func DoBeforeClusterCreate(ctx context.Context, request *runtimehooksv1.BeforeClusterCreateRequest, response *runtimehooksv1.BeforeClusterCreateResponse) {
	log := ctrl.LoggerFrom(ctx)
	log.Info("BeforeClusterCreate is called")
	// Your implementation
}

// DoBeforeClusterUpgrade handles calls to the BeforeClusterUpgrade hook.
func DoBeforeClusterUpgrade(ctx context.Context, request *runtimehooksv1.BeforeClusterUpgradeRequest, response *runtimehooksv1.BeforeClusterUpgradeResponse) {
	log := ctrl.LoggerFrom(ctx)
	log.Info("BeforeClusterUpgrade is called")
	// Your implementation
}

For a full example see our test extension.

Please note that a Runtime Extension server can serve multiple Runtime Hooks (in the example above BeforeClusterCreate and BeforeClusterUpgrade) at the same time. Each of them is handled at a different path, like the Kubernetes API server does for different API resources. The exact format of those paths is handled by the server automatically in accordance with the OpenAPI specification of the Runtime Hooks.

There is an additional Discovery endpoint which is automatically served by the Server. The Discovery endpoint returns a list of extension handlers to inform Cluster API which Runtime Hooks are implemented by this Runtime Extension server.

Please note that Cluster API is only able to enforce the correct request and response types as defined by a Runtime Hook version. Developers are fully responsible for all other elements of the design of a Runtime Extension implementation, including:

- The choice of programming language; please note that Golang is the language of choice, and we are not planning to test or provide tooling and libraries for other languages. Nevertheless, given that we rely on OpenAPI and plain HTTPS calls, other languages should just work, but support will be provided on a best-effort basis.

- The choice between a dedicated and a shared HTTPS server for the Runtime Extension (a shared server can, for example, also serve a metrics endpoint).

When using Golang the Runtime Extension developer can benefit from the following packages (provided by the sigs.k8s.io/cluster-api module) as shown in the example above:

- exp/runtime/hooks/api/v1alpha1 contains the Runtime Hook Golang API types, which are also used to generate the OpenAPI specification.

- exp/runtime/catalog provides the Catalog object to register Runtime Hook definitions. The Catalog is then used by the server package to handle requests. Catalog is similar to the runtime.Scheme of the k8s.io/apimachinery/pkg/runtime package, but it is designed to store Runtime Hook registrations.

- exp/runtime/server provides a Server object which makes it easy to implement a Runtime Extension server. The Server will automatically handle tasks like marshalling/unmarshalling requests and responses. A Runtime Extension developer only has to implement a strongly typed function that contains the actual logic.

Guidelines

While writing a Runtime Extension the following important guidelines must be considered:

Timeouts

Runtime Extension processing adds to the reconcile duration of Cluster API controllers, so Runtime Extensions should respond to requests as quickly as possible, typically in milliseconds. Runtime Extension developers can decide how long the Cluster API Runtime should wait for a Runtime Extension to respond before treating the call as a failure (the maximum is 30s) by returning the timeout during discovery. A Runtime Extension can of course trigger long-running tasks in the background, but it shouldn’t block synchronously on them.
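The idea of handing slow work to the background can be sketched as follows. This is a minimal illustration using only the Go standard library; backgroundWorker, newBackgroundWorker and TryEnqueue are hypothetical names and are not part of Cluster API.

```go
package main

import (
	"fmt"
	"sync"
)

// backgroundWorker is a hypothetical helper (not a Cluster API type). It runs
// queued tasks on its own goroutine so that a handler can return right away.
type backgroundWorker struct {
	tasks chan func()
	wg    sync.WaitGroup
}

// newBackgroundWorker starts a single goroutine that drains the task queue.
func newBackgroundWorker(queueSize int) *backgroundWorker {
	w := &backgroundWorker{tasks: make(chan func(), queueSize)}
	w.wg.Add(1)
	go func() {
		defer w.wg.Done()
		for task := range w.tasks {
			task()
		}
	}()
	return w
}

// TryEnqueue hands a task to the worker without blocking. It returns false
// when the queue is full, so the caller can still answer within its timeout.
func (w *backgroundWorker) TryEnqueue(task func()) bool {
	select {
	case w.tasks <- task:
		return true
	default:
		return false
	}
}

// Close stops accepting tasks and waits for the queued ones to finish.
func (w *backgroundWorker) Close() {
	close(w.tasks)
	w.wg.Wait()
}

func main() {
	w := newBackgroundWorker(8)

	// A handler would call TryEnqueue and then fill in its response.
	accepted := w.TryEnqueue(func() { fmt.Println("slow work runs in the background") })
	fmt.Println("handler returned without waiting, task accepted:", accepted)

	w.Close()
}
```

In a real extension the handler would probably pair this with a blocking response (see Blocking Hooks) until the background task reports completion; that wiring is left out here.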

Availability

A Runtime Extension failure could result in errors in handling the lifecycle of workload clusters, so the implementation should be robust, have proper error handling, avoid panics, etc. Failure policies can be set up to mitigate the negative impact of a Runtime Extension on the Cluster API Runtime, but this option can’t be used in all cases (see Error Management).
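One way to keep a bug in the handler logic from turning into an aborted request is to convert panics into ordinary errors. The sketch below assumes a hypothetical safeCall helper wrapped around the handler logic; it is not something the server package is known to provide.

```go
package main

import (
	"errors"
	"fmt"
	"log"
)

// safeCall is a hypothetical helper. It runs fn and converts a panic into an
// ordinary error, so the caller can still build a well-formed failure response.
func safeCall(fn func() error) (err error) {
	defer func() {
		if r := recover(); r != nil {
			// Keep the details in the extension's own log and return a fixed
			// message, so the surfaced error stays deterministic.
			log.Printf("recovered from panic: %v", r)
			err = errors.New("internal error in handler")
		}
	}()
	return fn()
}

func main() {
	fmt.Println(safeCall(func() error { return nil }))
	fmt.Println(safeCall(func() error { return errors.New("validation failed") }))
	fmt.Println(safeCall(func() error { panic("unexpected nil value") }))
}
```

Returning a fixed message rather than the panic value also fits the guidance on error messages later in this section.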

Blocking Hooks

A Runtime Hook can be defined as “blocking” - e.g. the BeforeClusterUpgrade hook allows a Runtime Extension to prevent the upgrade from starting. A Runtime Extension registered for the BeforeClusterUpgrade hook can block by returning a non-zero retryAfterSeconds value. The following considerations apply:

- The system might decide to retry the same Runtime Extension even before the retryAfterSeconds period expires, e.g. due to other changes in the Cluster, so retryAfterSeconds should be considered as an approximate maximum time before the next reconcile.

- If there is more than one Runtime Extension registered for the same Runtime Hook and more than one returns retryAfterSeconds, the shortest non-zero value will be used.

- If there is more than one Runtime Extension registered for the same Runtime Hook and at least one returns retryAfterSeconds, all Runtime Extensions will be called again.

A detailed description of what “blocking” means for each specific Runtime Hook is documented case by case in the hook-specific implementation documentation (e.g. Implementing Lifecycle Hook Runtime Extensions).
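The "shortest non-zero value" rule for retryAfterSeconds can be illustrated with a small function. This is only a sketch of the rule as described here, not Cluster API's own code; aggregateRetryAfterSeconds is a hypothetical name.

```go
package main

import "fmt"

// aggregateRetryAfterSeconds returns the shortest non-zero value among the
// given responses, or 0 when no extension asked to be retried.
// Hypothetical helper that only illustrates the rule described in the text.
func aggregateRetryAfterSeconds(values []int32) int32 {
	var shortest int32
	for _, v := range values {
		if v <= 0 {
			continue // this extension does not block
		}
		if shortest == 0 || v < shortest {
			shortest = v
		}
	}
	return shortest
}

func main() {
	// Two extensions block, one does not: the shortest non-zero value wins.
	fmt.Println(aggregateRetryAfterSeconds([]int32{0, 30, 10})) // prints 10

	// Nobody blocks: the result is 0 and the operation can proceed.
	fmt.Println(aggregateRetryAfterSeconds([]int32{0, 0})) // prints 0
}
```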

Side Effects

Runtime Extensions should avoid side effects where possible, which means they should operate only on the content of the request sent to them and not make out-of-band changes. If side effects are required, the rules defined in the following sections apply.

Idempotence

An idempotent Runtime Extension is able to succeed even if it has already completed before (the Runtime Extension checks the current state and changes it only if necessary). This is necessary because a Runtime Extension may be called many times after it has already succeeded, since other Runtime Extensions for the same hook may not succeed in the same reconcile.

A practical example that explains why idempotence is relevant is the fact that extensions could be called more than once for the same lifecycle transition, e.g.

- Two Runtime Extensions are registered for the BeforeClusterUpgrade hook.

- Before a Cluster upgrade is started both extensions are called, but one of them temporarily blocks the operation by asking to retry after 30 seconds.

- After 30 seconds the system retries the lifecycle transition, and both extensions are called again to re-evaluate if it is now possible to proceed with the Cluster upgrade.
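The "check current state and change it only if necessary" pattern can be sketched as follows. The backupStore type stands in for whatever external system an extension might touch; it and EnsureBackup are hypothetical and exist only for illustration.

```go
package main

import "fmt"

// backupStore is a hypothetical in-memory stand-in for an external system
// that an extension might modify as a side effect.
type backupStore struct {
	taken  map[string]bool
	writes int
}

func newBackupStore() *backupStore {
	return &backupStore{taken: map[string]bool{}}
}

// EnsureBackup records a backup for the cluster and version only if none
// exists yet. It reports whether it changed anything, and it succeeds either
// way, so calling it repeatedly for the same transition is safe.
func (s *backupStore) EnsureBackup(cluster, version string) bool {
	key := cluster + "@" + version
	if s.taken[key] {
		return false // already done in an earlier call, nothing to change
	}
	s.taken[key] = true
	s.writes++
	return true
}

func main() {
	store := newBackupStore()

	// First call for the upgrade: the side effect happens.
	fmt.Println(store.EnsureBackup("my-cluster", "v1.29.0")) // prints true

	// The hook is retried after another extension blocked: no second backup.
	fmt.Println(store.EnsureBackup("my-cluster", "v1.29.0")) // prints false
	fmt.Println(store.writes)                                // prints 1
}
```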

Avoid dependencies

Each Runtime Extension should accomplish its task without depending on other Runtime Extensions. Introducing dependencies across Runtime Extensions makes the system fragile, and it is probably a consequence of poor “Separation of Concerns” between extensions.

Deterministic result

A deterministic Runtime Extension is implemented in such a way that given the same input it will always return the same output.

Some Runtime Hooks, e.g. external patches, might explicitly require the corresponding Runtime Extensions to support this property. But we encourage developers to follow this pattern more generally, given that it fits well with practices like unit testing and generally makes the entire system more predictable and easier to troubleshoot.
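In Go, a common source of non-determinism is map iteration order, which is deliberately randomized. The sketch below shows one way to keep output stable by sorting keys first; renderLabels is a hypothetical function used only for illustration.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// renderLabels turns a label map into a string. Iterating over the map
// directly could yield a different order on every call, so the keys are
// sorted first to make the result depend only on the input.
func renderLabels(labels map[string]string) string {
	keys := make([]string, 0, len(labels))
	for k := range labels {
		keys = append(keys, k)
	}
	sort.Strings(keys)

	parts := make([]string, 0, len(keys))
	for _, k := range keys {
		parts = append(parts, k+"="+labels[k])
	}
	return strings.Join(parts, ",")
}

func main() {
	labels := map[string]string{"tier": "prod", "region": "eu"}
	fmt.Println(renderLabels(labels)) // prints region=eu,tier=prod
}
```

Because the function depends only on its input, a plain table-driven unit test is enough to pin down its behaviour.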

Error messages

Runtime Extension authors should be aware that error messages are surfaced as conditions in Kubernetes resources and recorded in the Cluster API controller’s logs. As a consequence:

- Error messages must not contain any sensitive information.

- Error messages must be deterministic, and must avoid including timestamps or values that change at every call.

- Error messages must not contain external errors when it’s not clear if those errors are deterministic (e.g. errors returned from cloud APIs).

Caution

If an error message is not deterministic and changes at every call even if the problem is the same, it could lead to the conditions of Kubernetes resources continuously changing. This acts as a denial-of-service attack on the controllers processing those resources and might impact system stability.

ExtensionConfig

To register your runtime extension apply the ExtensionConfig resource in the management cluster, including your CA certs, the ClusterIP service associated with the app and namespace, and the target namespace for the given extension. Once created, the extension will detect the associated service and discover the associated Hooks. To verify this, you can check the status of the ExtensionConfig. Below is an example of an ExtensionConfig:

apiVersion: runtime.cluster.x-k8s.io/v1alpha1

kind: ExtensionConfig

metadata:

annotations:

runtime.cluster.x-k8s.io/inject-ca-from-secret: default/test-runtime-sdk-svc-cert

name: test-runtime-sdk-extensionconfig

spec:

clientConfig:

service: